This article may as well be part of a series: 1 2 (read this first)

A note on notation: We use the abbreviation “simple^3” in this post to refer to simple simple simple sliding block puzzles.

Warning: This article contains original research! (It’s also more papery than most of the other articles on this site.)

In the previous post I mentioned several methods that could be used to speed up the finding and exhaustive searching of sliding block puzzles, as well as a candidate for the ‘hardest’ one-piece-goal diagonal-traverse 4×4 sliding block puzzle. I am pleased to say that the various bugs have been worked out of the sliding block puzzle searcher, and that 132 has been confirmed to be the maximum number of moves for a simple simple simple 4×4 sliding block puzzle with no internal walls!

(that’s not a lot of qualifiers at all)

As the reader may expect, this blog post will report the main results from the 4×4 search. Additionally, I’ll go over precisely how the algorithms very briefly mentioned in the previous post work, and some estimated running times for various methods. Following that, various metrics for determining the difficulty of a SBP will be discussed, and a new metric will be introduced which, while it is slow and tricky to implement, is expected to give results actually corresponding to the difficulty as measured by a human.

Search Results

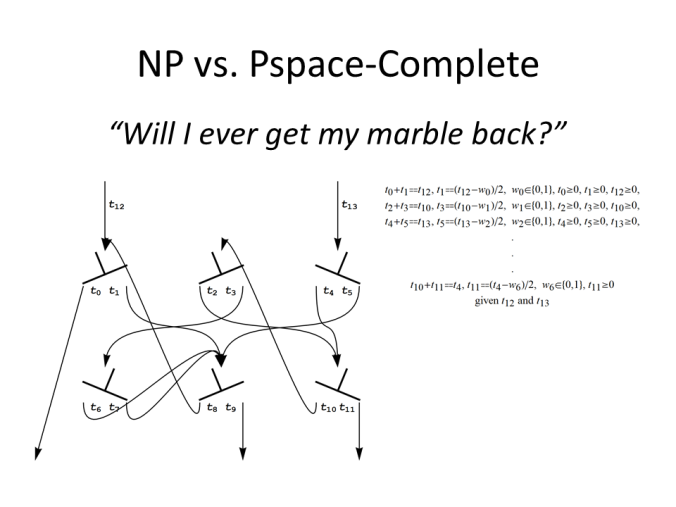

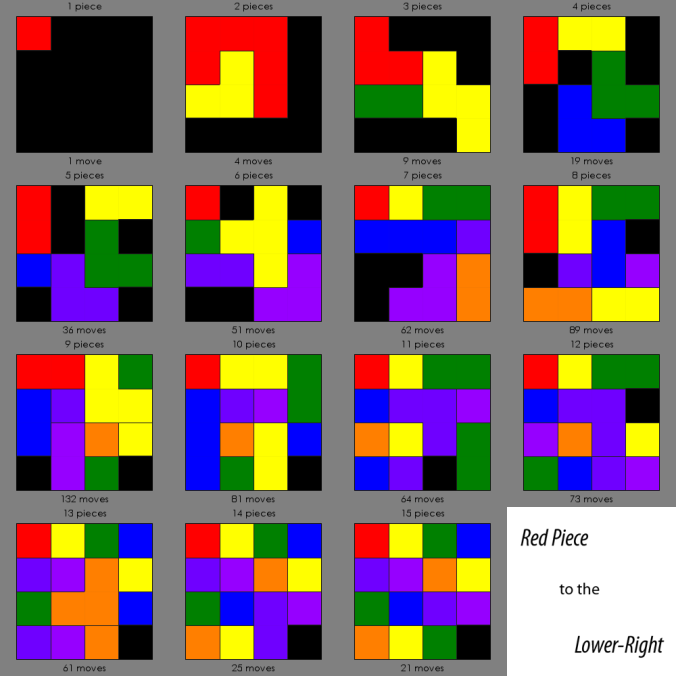

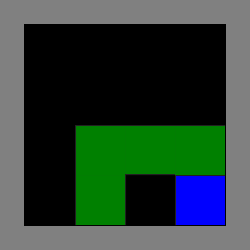

Running SBPSearcher on all the 4x4s takes about 3 hours (processing 12295564 puzzles), which was quite useful for fixing hidden bugs in the search algorithm. Here are the best simple^3 4×4 n-piece SBPs, where n goes from 1 to 15:

Click to view in full size

And for comparison, the move counts vs. the previously reported move counts:

1 4 9 19 36 51 62 89 132 81 64 73 61 25 21

1 4 9 24 36 52 68 90 132 81 64 73 61 25 21

Notice that all the entries in the top row of the table are either less than or equal to their respective entry in the bottom row of the table, some (such as in the case of p=7 or p=4) being much less. This is because the previous search (run, in fact, as a subfeature in the sliding block puzzle evolver) started with all possible positions and defined the goal piece to be the first piece encountered going left to right, top to bottom, across the board. As such, the search included both all the 4×4 simple^3 sliding block puzzles as well as a peculiar subclass of sliding block puzzles where the upper-left square is empty but the goal piece is often a single square away! This accounts for the 6-piece case and the 8-piece case (in which the first move is to move the goal piece to the upper-left), but what about the other two cases?

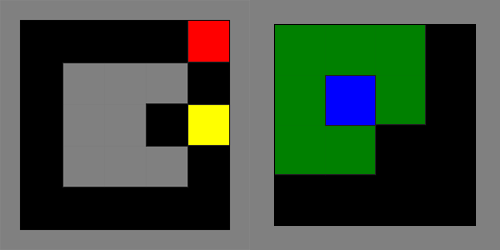

For the 4-piece case, the originally reported puzzle (see here for the whole list) wasn’t simple^3, and it isn’t possible to convert it into a simple^3 SBP by shifting blocks in less than 5 moves. Interestingly, the new ‘best’ puzzle for 4 pieces is just James Stephens’ Simplicity with a few blocks shifted, a different goal piece, and a different goal position! (There could be copyright issues, so unless stated, the puzzles in this article are public domain except for the one in the upper-right corner of this image) Additionally, the 7-piece 68-move puzzle in the previous article is impossible to solve! The upper-left triomino should be flipped vertically in place. I expect this to have been a typing error, but the question still stands: Why is there a 6-move difference?

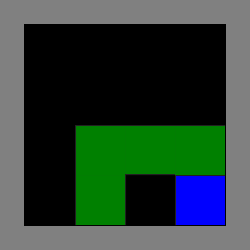

As mentioned before, the actual stating of what constitutes a simple simple simple puzzle is as such: “A sliding block puzzle where the piece to be moved to the goal is in the upper-left corner, and the goal is to move the goal piece to the lower-right corner of the board”. Unfortunately, there’s some ambiguity as to when a piece is in the lower-right corner of the board – is it when the lower-right cell is taken up by a cell in the goal piece, or is it when the goal piece is as far to the lower-right as it can be? If we take the latter to be the definition, then additional ambiguities pop up when faced with certain problems, such as the following one often encountered by SBPSearcher:

Which piece has just moved into the lower-right?

Because of problems like these, SBPSearcher uses the former definition, which means that puzzles where the goal piece takes the shape of an R aren’t processed. (In actuality, it’s SBPFinder that does this checking, when it checks if a puzzle is in the ‘justsolved’ state). If we say that the first definition is stricter than the second, then it could be said that SBPSearcher searched only through the “Strict simple simple simple 4×4 Sliding Block Puzzles”. While I don’t think that any of the results would change other than the p=7 case, it would probably be worth it to modify a version of SBPSearcher so that it works with non-strict simple simple simple sliding block puzzles.

A few last interesting things: The 13-piece case appears to be almost exactly the same as the Time puzzle, listed in Hordern’s book as D50-59! (See also its entry in Rob Stegmann’s collection) Additionally, the same split-a-piece-and-rearrange-the-pieces-gives-you-extra-moves effect is still present, if only because of the chosen metric.

The Algorithm, In Slightly More Detail Than Last Time

There are only two ‘neat’ algorithms contained in the entire SBP collection of programs, these two in SBPFinder and SBPSearcher respectively. The first of them is used to find all possible sliding block puzzle ‘justsolved’ positions that fit in an NxM grid, and runs in at most O(2*3^(N+M-2)*4^((N-1)*(M-1))) time. (Empirical guess; Due to the nature of the algorithm, the calculation of the running time is probably very hairy).

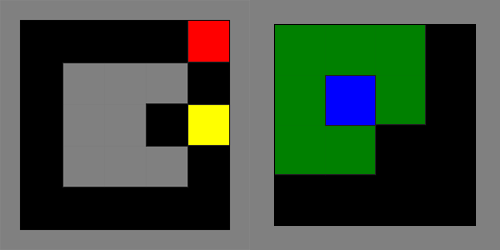

First of all, the grid is numbered like this:

0 1 3 6 10

2 4 7 11 15

5 8 12 16 19

9 13 17 20 22

14 18 21 23 24

where the numbers increase going from top-right to lower-left, and moving to the next column over every time the path hits the left or bottom edges. (Technical note: For square grids, the formula x+y<N?TriangleNumber(x + y + 1) – x- 1:N*M – TriangleNumber(N + M- x – y – 1) + N – x – 1 should generate this array)

Then, the algorithm starts at cell #0, and does the following:

Given a partially filled board: (at cell 0 this board is all holes)

- Take all the values of the cells from (cell_number-1) to the number of the cell to the left of me, and put that in list A.

- Remove all duplicates from A.

- If the following values do not exist in A, add them: 0 (aka a hole) and the smallest value between 1 and 4 inclusive that does not exist in A (if there is no smallest value between 1 and 4, don’t add anything except the 0)

- Run fillboard (that is, the current function), giving it cell_number+1 and the board given to this level of the function with the value of cell_number changed to n, for each value n in A.

However, if the board is all filled (i.e, cell_number=25) , check to see that the board has holes and that it is justsolved, and if so, standardize the piece numbering and make sure that you haven’t encountered it before, and if so, sort the board into the appropriate piece number “box”.

Once the fillboard algorithm finishes, you should have N*M-1 boxes with all the possible justsolved positions that can be made on an N*M grid! There are a number of other possible methods that do the same thing- all it basically needs to do is generate all possible ways that pieces fitting in an NxM grid can be placed in a grid of the same size.

For example, you could potentially go through all possible 5-colorings of the grid (4-colors and holes), and remove duplicates, but that would take O(5^(N*M)) time, which isn’t the most feasible option for even a 4×4 grid. You could also proceed in a way that would generate the results for the next number of pieces based on the results of the current number of pieces and all possible rotations and positions of a single piece in an NxM grid by seeing which pieces can be added to the results of the current stage, but that would take O(single_piece_results*all_justsolved_results). While that number may seem small, taking into account the actual numbers for a 4×4 grid (single_piece_results=11505 and all_justsolved_results=12295564) reveals the expected running time to be about the same as the slow 5-coloring method. However, it may be possible to speed up this method using various tricks of reducing which pieces need to be checked as to if they can be added. Lastly, one could go through all possibilities of edges separating pieces, and then figuring out which shapes are holes. The time for this would be somewhere between O(2^(3NM-N-M)) and O(2^(2NM-N-M)), the first being clearly infeasible and the second being much too plausible for a 4×4 grid.

In practice, the fillboard algorithm needs to check about 1.5 times the estimated number of boards to make sure it hasn’t found them before, resulting in about half a billion hash table lookups for a 4×4 grid.

The second algorithm, which SBPSearcher is almost completely composed of, is much simpler! Starting from a list of all justsolved puzzles (which can be generated by the fillboard algorithm), the following is run for each board in the list:

- Run a diameter search from the current puzzle to find which other positions in the current puzzle’s graph have the goal piece in the same position;

- Remove the results from step 1 from the list;

- Run another diameter search from all the positions from step 1 (i.e consider all positions from step 1 to be 0 moves away from the start and work from there), and return the last position found where the goal piece is in the upper-left.

Step 2 is really where the speedup happens- Because each puzzle has a graph of positions that can be reached from it, and some of these positions are also in the big list of puzzles to be processed, you can find the puzzle furthest away from any of the goal positions by just searching away from them. Then, because the entire group has been solved, you don’t need to solve the group again for each of the other goal positions in it and those can be removed from the list. For a 4×4 board, the search can be done in 3 hours, 27 minutes on one thread on a computer with a Core I7-2600 @3.4 Ghz and a reasonable amount of memory. In total, the entire thing, finding puzzles and searching through all of the results, can be done in about 4 hours.

Of course, where there are algorithms, there are also problems that mess up the algorithms- for example, how would it be possible to modify SBPSearcher’s algorithm to do, say, simple simple puzzles? Or, is it possible to have the fillboard algorithm work with boards with internal walls or boards in weird shapes, or does it need to choose where the walls are? An interesting thing that would seem to point that the answer to the first question might be yes is that to find the pair of points furthest apart in a graph (which would be equivalent to finding the hardest compound SBP in a graph) requires only 2 diameter searches! Basically, you start with any point in the graph, then find the point furthest away from that, and let it be your new point. Then, find the point furthest away from your new point, and the two points, the one you’ve just found and the one before that, are the two points farthest away from each other. (See “Wennmacker’s Gadget”, page 98-99 and 7 in Ivan Moscovich’s “The Monty Hall Problem and Other Puzzles”)

Metrics

a.k.a. redefining the problem

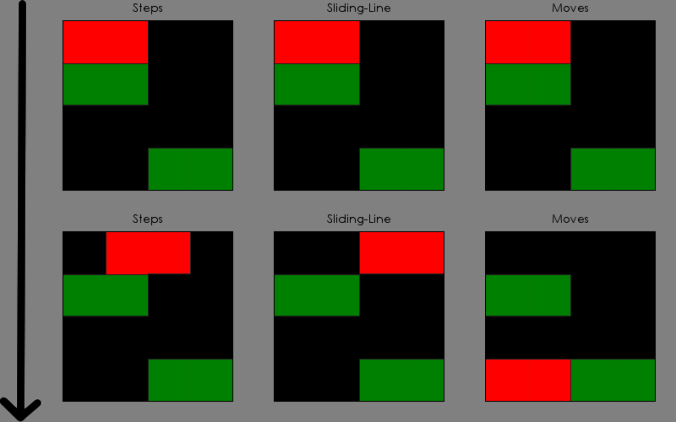

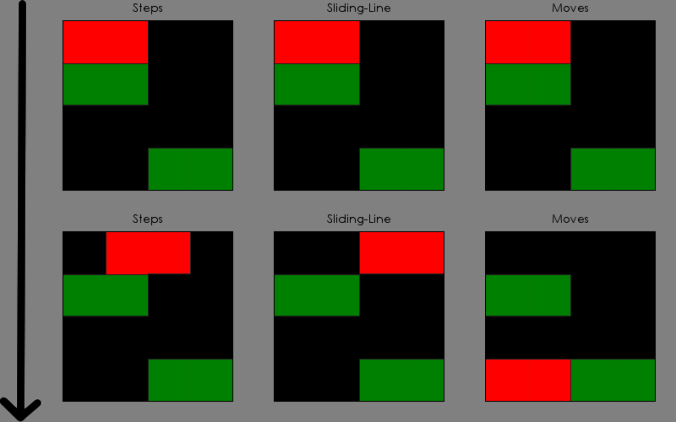

Through the last 3 posts on sliding block puzzles, I have usually used the “Moves” metric for defining how hard a puzzle is. Just to be clear, an action in the Moves metric is defined as sliding a single piece to another location by a sequence of steps to the left, right, up and down, making sure not to pass through any other pieces or invoke any other quantum phenomena along the way. (The jury is out as to if sliding at the speed of light is allowed). While the majority of solvers use the Moves metric (my SBPSolver, Jimslide, KlotskiSolver, etc.), there are many other metrics for giving an approximation to the difficulty of a sliding block puzzle, such as the Steps metric, and the not-widely used sliding-line metric. The Steps metric is defined as just that- an action (or ‘step’) is sliding a single piece a single unit up, down, left, or right. The sliding-line metric is similar: an action is sliding a single piece any distance in a straight line up, down, left or right. So far as I know, only Analogbit’s online solver and the earliest version of my SBPSolver used the steps metric, and only Donald Knuth’s “SLIDING” program has support for the sliding-line metric. (It also has support for all the kinds of metric presented in this post except for the BB metrics!)

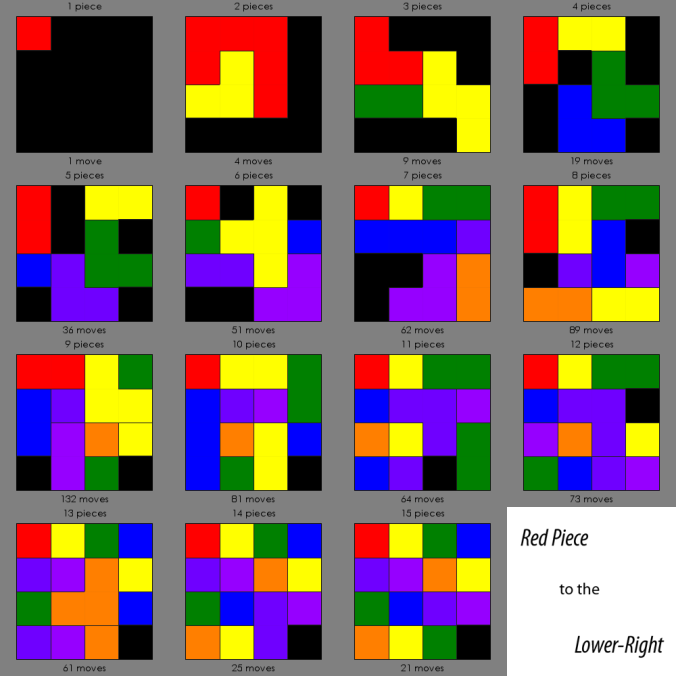

Demonstration of various metrics

Additionally, each of the 3 metrics described above has another version which has the same constraints but can move multiple pieces at a time in the same direction(s). For example, a ‘supermove’ version of the Steps metric would allow you to slide any set of pieces one square in any one direction. (As far as I know, only Donald Knuth’s SLIDING program and Soft Qui Peut’s SBPSolver have support for any of the supermove metrics) In total, combining the supermove metrics with the normal metrics, there are 6 different metrics and thus 6 different ways to express the difficulty of a puzzle as a number. Note however, that a difficulty in one metric can’t be converted into another, which means that for completeness when presenting results you need to solve each sliding block puzzle 6 different ways! Even worse, the solving paths in different metrics need not be the same!

For example, in the left part of the image above, where the goal is to get the red piece to the lower-right corner, the Moves metric would report 1, and the red piece would go around the left side of the large block in the center. However, the Steps metric would report 5, by moving the yellow block left and then the red block down 4 times. Also, in the right picture both the Moves and Steps metrics would report ∞, because the green block can’t be moved without intersecting with the blue, and the blue block can’t be moved without intersecting with the green, but any of the Supermove metrics would report a finite number by moving both blocks at once!

Various other metrics can be proposed, some with other restrictions (You may not move a 1×1 next to a triomino, etc.), some which, like the Supermove metrics and the second puzzle above, actually change the way the pieces move, and you can eventually get to the point where it’s hard to call the puzzle a sliding block puzzle anymore. (For example, Dries de Clerq’s “Flying Block Puzzles” can be written as a metric: “An action is defined as a move or rotation of one piece to another location. Pieces may pass through each other while moving, but only one piece may be moved at a time”.

Suppose, however, that for now we’re purist and only allow metrics which generate numbers based on the step, sliding-line, moves, super-step, super-sliding-line, and super-moves metrics. It can be seen , quite obviously in fact, that these metrics don’t in all cases show the actual difficulty of the puzzle being considered. For example, take a very large (say 128×128) grid, and add a 126×126 wall in the center. Fill the moat that forms with 507 1×1 cells, all different pieces, and make the problem be to bring the block in the upper-left down to the lower-right. If my calculations are correct, the resulting problem should take 254*507+254=129,032 steps, sliding-line actions, and moves to solve, which would seem to indicate that this is a very hard puzzle indeed! However, any person who knows the first thing about sliding block puzzles should be able to solve it -assuming they can stay awake the full 17 hours it would take!

Because of this discouraging fact- that is, 6 competing standards, none of which are quite right, I would like to introduce a 7th, this one based on a theoretical simulation of a robot that knows the first thing about sliding block puzzles, but nothing else.

The BB Metric

a.k.a. attempting not to invoke the xkcd reference

Nick Baxter and I have been working on a metric which should better approximate the difficulty of getting from one point to another in a position graph. The basic idea is that the difficulty of getting from node A to node B in a graph is about the same as the average difficulty of getting from node A to node B in all spanning trees of the graph. However, finding the difficulty of getting to node A to node B in a tree is nontrivial, or at least so it would seem at first glance.

Suppose you’re at the entrance of a maze, and the maze goes on for far enough and is tall enough such that you can only see the paths immediately leading off from where you are. If you know that the maze is a tree (i.e, it has no loops), then a reasonable method might be to choose a random pathway, and traverse that part of the maze. If you return from that part to the original node, then that part doesn’t contain the goal node and you can choose another random pathway to traverse, making sure of course not to go down the same paths you’ve gone down before. (Note that to be sure that the goal node isn’t in a part of the maze, you need to go down all the paths twice, to walk down a path and then back up the path). For now, we take the difficulty of the maze to be the average number of paths you’ll need to walk on to get to the goal node or decide that the maze has no goal(counting paths you walk down and up on as +2 to the difficulty). Because of the fact that if the node you’re in has no descendant nodes which are the goal node you’ll need to go down all of the paths leading from that node twice, the difficulty of the part of the maze tree branching off from a node A can be calculated as

sum(a_i+2,i=1 to n) (eq. 1)

where n is the number of subnodes, and a_i is the difficulty of the ith subnode. Also, if the node A is on the solution path between the start and end nodes, then the difficulty of A can be calculated as

a_n+1+1/2 sum(a_i+2,i=1 to n-1) (eq. 2)

where a_n is assumed to be the subnode which leads to the goal. This basically states that on average that you’ll have to go down half of the subpaths and the path leading to the goal to get to the goal. Because root nodes are assumed to have 0 difficulty, you can work up from the bottom of the maze, filling in difficulties of nodes as you go up the tree. After the difficulty of the root node has been calculated, the length of the path between start and end nodes should be subtracted to give labyrinths (mazes with only a single path) a BB difficulty of 0.

Perhaps surprisingly, it turns out that using this scheme, the difficulty of a tree with one goal node is always measured as V-1-m, where V is the number of nodes in the tree (and V-1 is the number of edges, but this is not true for graphs) and m is the number of steps needed to get from the start node to the end node in the tree! Because of this, the difficulty of getting from one point to another in a graph under the BB metric is just the number of vertices, minus one, minus the average path length between the start and end nodes in the graph.

A few things to note (and a disclaimer): First of all, the actual graph of which positions can be reached in 1 action from each of the other positions actually depends on the type of move chosen, so the BB metric doesn’t really remedy the problem of the 6 competing standards! Secondly, the problem of computing the average path length between two points in a graph is really really hard to do quickly, especially because an algorithm which would also give you the maximum path length between two points in polynomial time would allow you to test if a graph has a Hamiltonian Path in polynomial time, and since the Hamiltonian Path problem is NP-Complete, you could also do the Traveling Salesman Problem, Knapsack Problem, Graph Coloring Problem, etc. in polynomial time! Lastly, I haven’t tested this metric on any actual puzzles yet, and I’m also not certain that nobody else has come up with the same difficulty metric. If anybody knows, please tell me!

One last note: Humans don’t actually walk down mazes by choosing random paths- usually it’s possible to see if a path dead-ends, and often people choose the path leading closest to the finish first, as well as a whole host of other techniques that people use when trying to escape from a maze. Walter D. Pullen, author of the excellent maze-making program Daedalus, has a big long list of things that make a maze difficult here. (Many of these factors could be implemented by just adding weights to eqns. 1 and 2 above)

Open Problems

a.k.a. things for an idling programmer to do

- What are the hardest simple simple simple 3×4 sliding block puzzles in different metrics? 2×8? 4×5? (Many, many popular sliding block puzzles fit in a 4×5 grid)

- How much of a difference do the hardest strict simple^3 puzzles have with the hardest simple^3 SBPs?

- How hard is it to search through all simple simple 4×4 SBPs? What about simple SBPs?

- (Robert Smith) Is there any importance to the dual of the graph induced by an SBP?

- Why hasn’t anybody found the hardest 15 puzzle position yet? (According to Karlemo and Östergård, only 1.3 TB would be required, which many large external hard drives today can hold! Unfortunately, there would be a lot of reading and writing to the hard disk, which would slow down the computation a bit.) (Or have they?)

- Why 132?

- What’s the hardest 2-piece simple sliding block puzzle in a square grid? Ziegler-Hunts & Ziegler-Hunts have shown a lower bound of 4n-16 for an nxn grid, n>6. How to do so isn’t too difficult, and is left as a puzzle for the reader.

- Is there a better metric for difficulty than the BB metric?

- Is there a better way to find different sliding block puzzle positions? (i.e, improve the fillboard algorithm?)

- Is it possible to tell if a SBP is solvable without searching through all possible positions? (This question was proposed in Martin Gardner’s article on Sliding Block Puzzles in the February 1964 issue of Scientific American)

- (Robert Smith) How do solutions of SBPs vary when we make an atomic change to the puzzle?

- Are 3-dimensional sliding block puzzles interesting?

- Would it be worthwhile to create an online database of sliding block puzzles based on the OEIS and as a sort of spiritual successor to Edward Hordern’s Sliding Piece Puzzles?

Sources

Ed Pegg, “Math Games: sliding-block Puzzles”, http://www.maa.org/editorial/mathgames/mathgames_12_13_04.html

James Stephens, “Sliding Block Puzzles”, http://puzzlebeast.com/slidingblock/index.html (see also the entire website)

Rob Stegmann, “Rob’s Puzzle Page: Sliding Puzzles”, http://www.robspuzzlepage.com/sliding.htm

Dries De Clerq, “Sliding Block Puzzles” http://puzzles.net23.net/

Neil Bickford, “SbpUtilities”, http://github.com/Nbickford/SbpUtilities

Jim Leonard, “JimSlide”, http://xuth.net/jimslide/

The Mysterious Tim of Analogbit, “Sliding Block Puzzle Solver”, http://analogbit.com/software/puzzletools

Walter D. Pullen, “Think Labyrinth!”, http://www.astrolog.org/labyrnth.htm

Donald Knuth, “SLIDING”, http://www-cs-staff.stanford.edu/~uno/programs/sliding.w

Martin Gardner, “The hypnotic fascination of sliding-block puzzles”, Scientific American, 210:122-130, 1964.

L.E. Hordern, “Sliding Piece Puzzles”, published 1986 Oxford University Press

Ivan Moscovich, “The Monty Hall Problem and Other Puzzles”

R.W. Karlemo, R. J. Östergård, “On Sliding Block Puzzles”, http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.55.7558

Robert Hearn, “Games, Puzzles, and Computation”, http://groups.csail.mit.edu/mac/users/bob/hearn-thesis-final.pdf

Ruben Grønning Spaans, “Improving sliding-block puzzle solvingusing meta-level reasoning”, http://daim.idi.ntnu.no/masteroppgave?id=5516

John Tromp and Rudi Cilibrasi, “”Limits on Rush Hour Logic Complexity”, arxiv.org/pdf/cs/0502068

David Singmaster et al., “Sliding Block Circular”, www.g4g4.com/pMyCD5/PUZZLES/SLIDING/SLIDE1.DOC

Thinkfun & Mark Engelberg, “The Inside Story of How We Created 2500 Great Rush Hour Challenges”, http://www.thinkfun.com/microsite/rushhour/creating2500challenges

Ghaith Tarawneh, “Rush Hour [Game AI]”, http://black-extruder.net/blog/rush-hour-game-ai.htm

Any answers to questions or rebukes of the data? Post a comment or email the author at (rot13) grpuvr314@tznvy.pbz